In the synthesizer world, MIDI velocity sensitivity is what I would call a “default feature”: a no-brainer to implement if you make software synths, samplers, instruments.

People want their musical performances to sound… human. Being able to vary the loudness of each note is a good first step. MIDI velocity is a way to encode that loudness value in a number from 0 to 127. So yeah… it’s a strange and small range (less than a byte!) but it’s one of those quirky things that all digital musicians end up familiar with (even if they don’t understand why).

And perhaps because it’s a default feature, MIDI velocity implementations can feel like an afterthought. You can almost hear devs saying “let’s check this off the list and get to the good stuff.”

That’s the thesis I’m kicking this article off with, at least 🙂

The background: I own a lot of software and hardware synths. I’m building a software synth. I learn most when I try to say the quiet parts out loud. So I thought I’d document an example of “doing my homework” on a particular sub-topic, in this case, MIDI velocity.

Confession

I’ve been building an additive synth for just about 4 years now and I still haven’t added velocity sensitivity.

I just feel meh about it! I’m saying this as someone with years of studying and playing piano. I play my Wurlitzer 200A electric piano daily. If anyone should advocate for better keyboard control, it should be me!

Instead, I just end up using my OP-1 as my controller. It doesn’t have a velocity-sensitive keybed (the OP-1 Field does, and apparently it’s decent!). So I just edit the velocity values manually, as needed.

Sure, a lot of the time I’m doing dev or sound design. So, I don’t really need more than Note On and Note Off.

But even as a musician playing my own synth, I haven’t felt the need for velocity as a feature. I’m finding (and liking) other ways to express loudness and modulate dynamics.

Maybe I’m procrastinating? But the truth is, I haven’t had great velocity experiences with other software synths and samplers. I would summarize MIDI velocity as “good in theory, cumbersome in practice.”

And why add a feature that will just be… meh?

Related: I did finally add another default feature, pitch bend. It was… 2 lines of code and took 5 minutes. Well, the prototype version…

Default Features

In synths, a “default feature” such as velocity seems to end up with one of two implementations: “the dumb obvious thing” or “pawn the complexity off on the user.”

In other words, devs are most likely to 1) copy and paste implementations they like or 2) engineer flexible bulk implementations such as curve editors and modulation matrices with routing.

Toss them pesky parameters in a bucket and let the user deal with ’em!

Synth devs everywhere

Don’t get me wrong. Solutions like a mod matrix work great for sound design experts who want flexibility über alles. But complexity comes at a usability and playability cost for many users: when everything is a choice, it’s harder to know what the good choices are.

Also, despite the perceived flexibility of something like a modulation matrix, linking one parameter to modulate another is only one type of relationship (even if you can adjust the curve, range, direction, etc.).

There are infinite other relationships available. An example: an algorithm that takes into account MIDI velocity, where notes fall on the keyboard, whether or not it’s chords or a single line being played, etc… or… just anything non-linear?…

So while both devs and sound designers may be “happy enough” with (or overwhelmed by) solutions like curve editors and modulation matrices, are more sophisticated relationships actually under-explored? Could this meh default feature be… better?

First Principles and Player Intention

Let’s role play as my favorite character, Someone Who Knows Better: Innovation and exploration around first principles have stagnated! Everyone is just doing the default thing! Checking features off the list! Copying other implementations with only minor variations! There’s unexplored territory here! And lots of exclamation marks!!

What does “first principles” even mean re: MIDI Velocity?

Velocity is a MIDI value that accompanies every Note On. The values come “live” from a keyboard or from manually inputting notes. (Note Off also has a velocity component, often mapped to release time, but we’ll ignore that for now.)

When played live, velocity can be seen as a signal that carries player intention.

This isn’t always the case, though — someone might just be entering in a few chords and will later normalize and adjust the velocity in the software.

Also, to say the quietest part about MIDI velocity out loud: it’s actually really hard to hit consistent or intended values (and software instruments tend to be less forgiving of this than acoustic ones).

For direct entry into a piano roll, the values are arbitrary and will just be the DAW default. Sure, they might be edited later to add expression. We’ll never know!

So already we run into a potential problem: sometimes there’s a very specific player intention behind the expression, and other times there’s no intention at all, just DAW defaults. In all cases, the instrument should sound good.

How is velocity calculated?

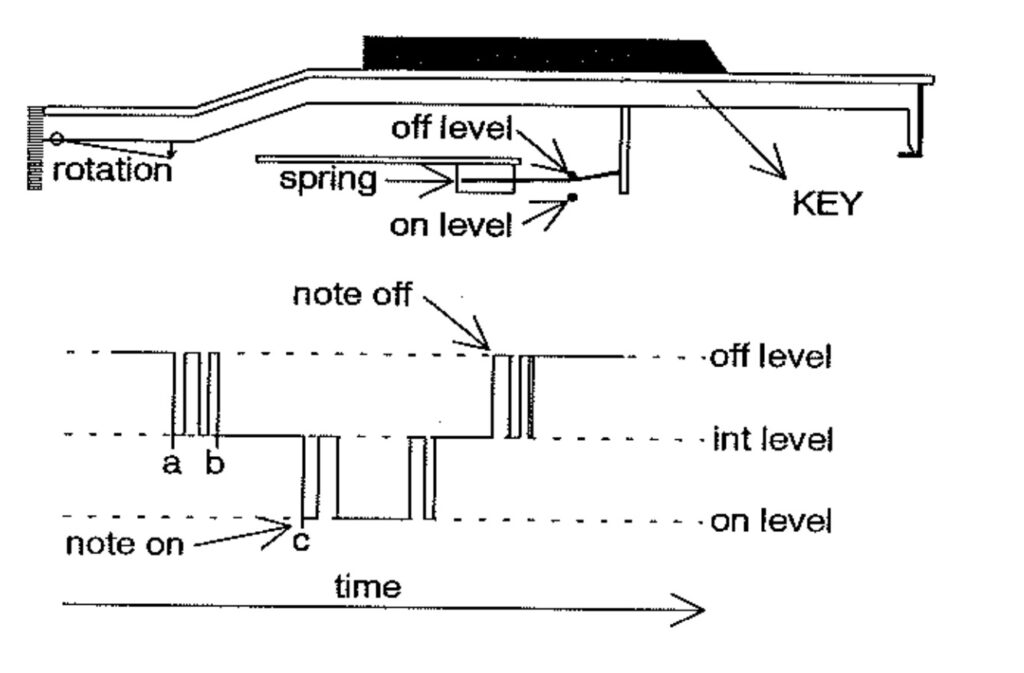

Velocity is literally how fast you press a physical key.

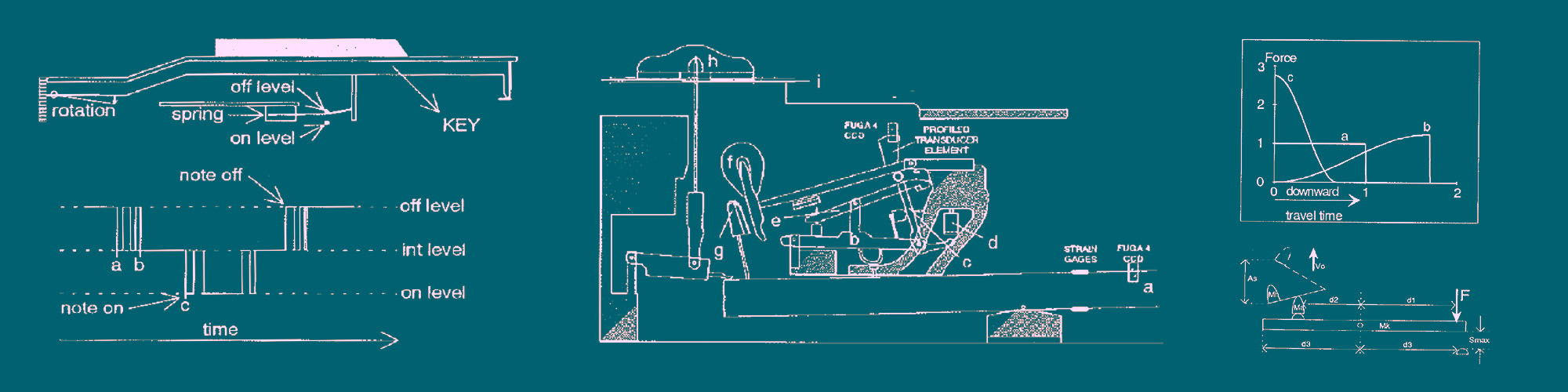

Speed is easy to measure. Physical devices like keyboard controllers report MIDI velocity by recording the time difference between two contact points.
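To make the “time between two contacts” idea concrete, here’s a minimal Python sketch of how a keybed might turn that timing into a velocity. The calibration constants (`FASTEST_MS`, `SLOWEST_MS`) and the log-scale interpolation are my assumptions for illustration; real controllers use their own, usually undocumented, timing ranges and lookup tables.

```python
import math

# Hypothetical calibration values: real keybeds use their own timing
# ranges and lookup tables, which are rarely documented.
FASTEST_MS = 2.0   # contact-to-contact time of a very hard strike
SLOWEST_MS = 60.0  # contact-to-contact time of a very gentle press


def velocity_from_contacts(t_first_ms: float, t_second_ms: float) -> int:
    """Map the time between two key contacts to a MIDI velocity (1-127).

    A shorter travel time means a faster key, so a higher velocity.
    This sketch interpolates on a log scale (an assumption; any
    monotonic curve would do).
    """
    # Clamp the measured time to the calibrated range.
    dt = min(max(t_second_ms - t_first_ms, FASTEST_MS), SLOWEST_MS)
    # 0.0 at the slowest press, 1.0 at the fastest strike.
    position = (math.log(SLOWEST_MS) - math.log(dt)) / (
        math.log(SLOWEST_MS) - math.log(FASTEST_MS)
    )
    # Never emit 0: a Note On with velocity 0 means Note Off.
    return 1 + round(position * 126)


print(velocity_from_contacts(0.0, 5.0))   # a fairly hard strike
print(velocity_from_contacts(0.0, 20.0))  # a gentler press
```

Moving the calibration constants or swapping the log interpolation for a linear one changes the “feel” of the whole keybed, which is one reason two controllers can report quite different velocities for what feels like the same strike.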

Our brain may want to translate “velocity” to “how hard I hit a note” or even “how loud a note is” — but those things aren’t necessarily true. It’s a messy proxy instead.

It is true that the player intention is often to play louder (whether that’s via volume or opening up a filter).

Why does MIDI Velocity exist as a feature?

In short, it’s because pianos are velocity sensitive.

A piano hammer hits the strings with more force when the key strike is faster.

When a piano note is struck harder, it also modulates the timbre, making it brighter.

How does MIDI Velocity work?

The MIDI 1.0 spec details that a Note On is accompanied by a 7-bit velocity value that ranges from 0 to 127.

So, besides the note number (and the channel, which is packed into the status byte), velocity is the only other piece of information carried with a Note On.

The spec says that a device that doesn’t implement velocity should send a default value of 64 (spoiler: DAWs don’t tend to implement this part of the spec…)

Interestingly, a Note On with a velocity value of 0 is also treated as a Note Off. So, pedantically, we only have 127 values for velocity, not 128.
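Here’s a sketch of what that looks like at the byte level, in Python. It assumes complete 3-byte messages (no running status) and only handles Note On (status `0x9n`) and Note Off (status `0x8n`).

```python
def parse_note_message(data: bytes):
    """Decode a 3-byte MIDI 1.0 Note On or Note Off message.

    Returns (kind, channel, note, velocity) where kind is "on" or "off".
    Assumes a complete message: running status is not handled.
    """
    if len(data) != 3:
        raise ValueError("expected exactly 3 bytes")
    status, note, velocity = data
    if note > 127 or velocity > 127:
        raise ValueError("data bytes must be 0-127 (7 bits)")
    message_type = status & 0xF0  # upper nibble: message type
    channel = status & 0x0F       # lower nibble: channel 0-15
    if message_type == 0x90:
        # A Note On with velocity 0 counts as a Note Off, so only
        # velocities 1-127 can actually start a note.
        kind = "off" if velocity == 0 else "on"
    elif message_type == 0x80:
        kind = "off"  # a true Note Off; this velocity is the release velocity
    else:
        raise ValueError(f"not a note message: status 0x{status:02X}")
    return kind, channel, note, velocity


print(parse_note_message(bytes([0x90, 60, 100])))  # ('on', 0, 60, 100)
print(parse_note_message(bytes([0x90, 60, 0])))    # ('off', 0, 60, 0)
```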

Velocity in synths tends to be meh

Back to the rant thesis. Most of my complaints boil down to “the defaults are bad!” or “it’s hard to be musical without tedious configuration” or “it’s too sensitive.”

Here’s an attempt to unify those knee-jerk criticisms into something more constructive: I can’t rely on velocity as a way to add expression as I move between devices and software. Sure, I could “get good” at playing one particular setup, but it’s very hard to carry that experience over to another setup without a lot of configuration.

It’s totally normal for different acoustic instruments to feel and behave differently from each other. Two piano brands can have very different actions, and which one you prefer boils down to personal preference.

But software has a much… wilder range of “feels” around velocity implementations. They range from weapons-grade curve editors with specific controller compensation to rudimentary oversensitive linear implementations that just Make Synth Note Louder. Even between patches, a particular synth/sampler can differ wildly. In other words, there is much less consistency / predictability than within acoustic instrument categories.

So while I can nerd out and appreciate some well-tuned velocity curves on a particular setup, when I move across instruments, MIDI Velocity often “gets in the way” of being musical.

Velocity is complicated

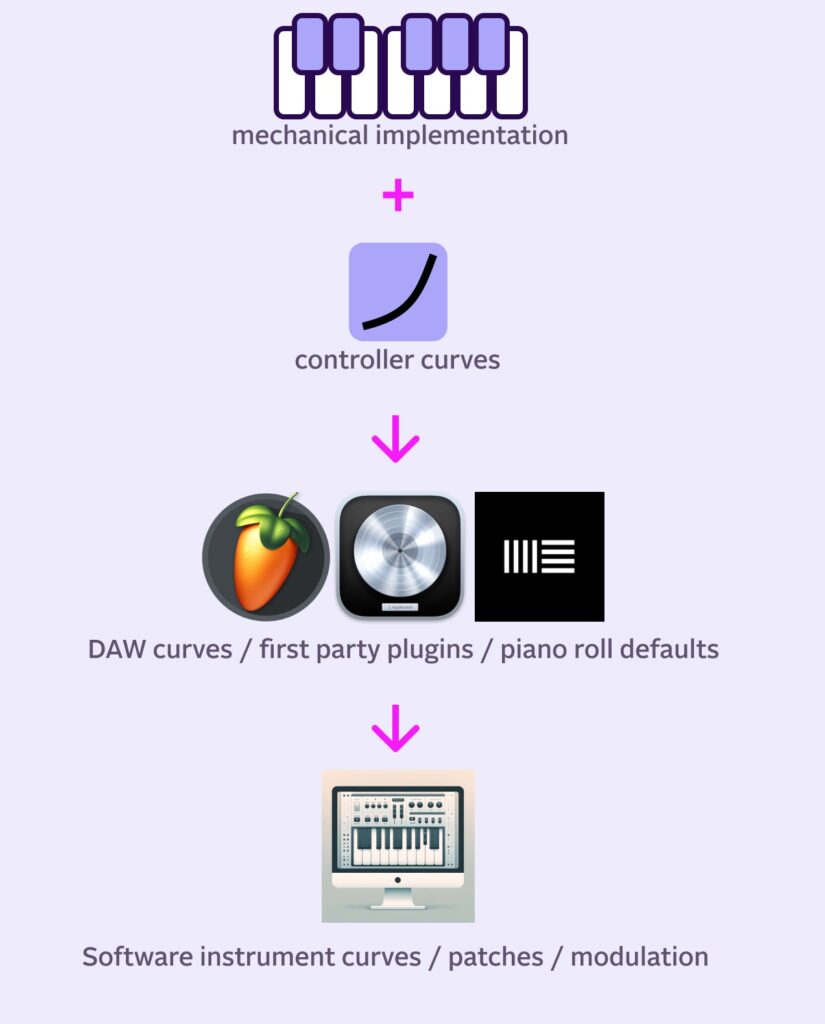

Although it seems like a simple proxy for “player expression,” velocity ends up quite convoluted. Consider these layers in play:

- The controller/synth keybed’s hardware “feel” and mechanical implementation of velocity.

- The controller/synth may have user selectable velocity curves.

- The DAW may have a velocity curve which interprets/translates incoming MIDI values.

- Manual piano roll inputting creates identical velocities by default.

- The software instrument may have a velocity curve interpreting the incoming values.

- The software instrument may have curve presets for specific controllers.

- The software instrument patch may or may not care about velocity.

- The software instrument patch may map velocity directly to volume, cutoff, or other params.

MIDI Velocity implementations are… quirky

The 0 to 127 velocity scale itself is an unusual historical artifact to have persisted to 2024! Humans are not used to thinking on this particular scale. It’s not as comfortable as 0-100. What value should be “normal” or “pretty loud”?

DAWs guide users with defaults and color coding, but it’s one of those things where people end up just choosing an arbitrary value or even the maximum value — there’s no standard guidance or best practice.

Again, the specific software will interpret and interpolate the incoming values anyway, so it’s really hard to generalize in a useful way!

And as someone reminded me, many sample libraries in particular sound their best within a specific range. I have piano libraries that sound absolutely terrible when velocity is over 110 — and drum libraries that definitely sound their best at 127.

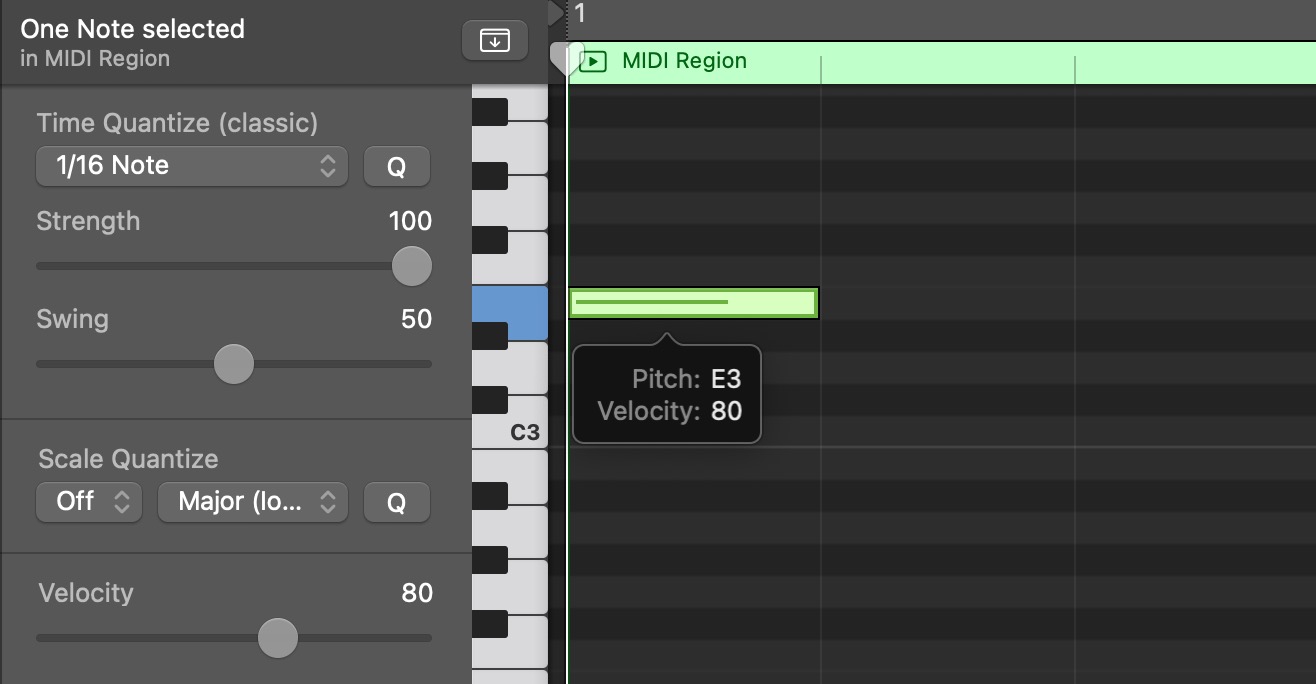

MIDI Velocity defaults in DAW Piano Rolls differ

When it comes to manually inputting notes, DAW behavior differs. None of them use 64, the spec’s default for devices that don’t implement velocity. Most modern software instruments would sound too quiet at this velocity.

Apple Logic defaults piano roll input to a velocity of 80 out of 127. You can change the velocity slider to some other value, but this is what you get out of the box in a new project.

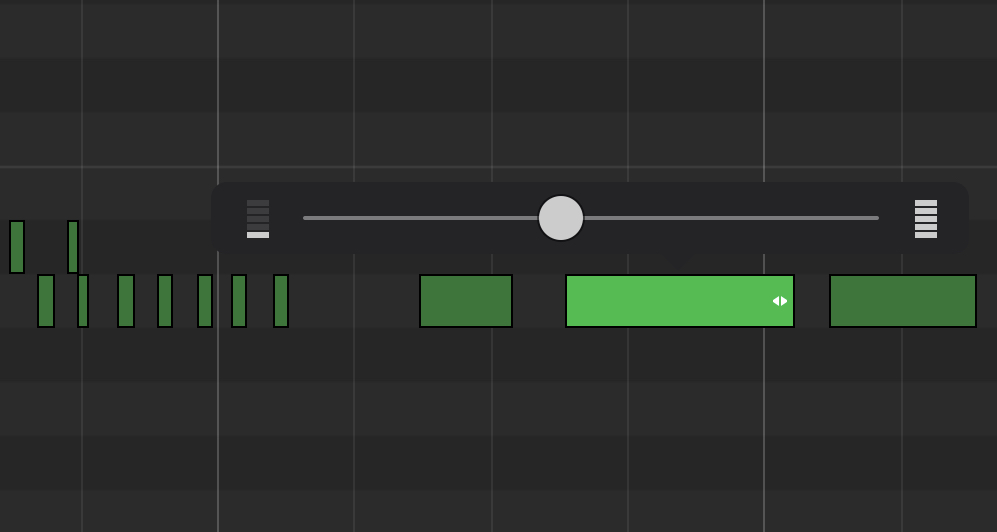

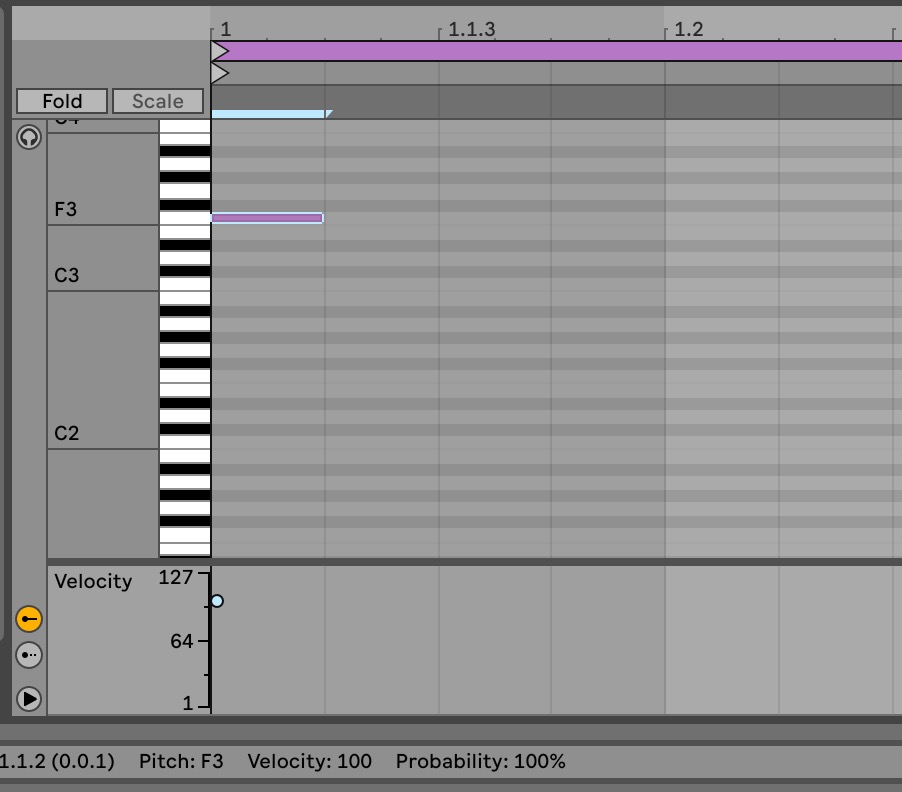

Ableton defaults you to 100 out of 127.

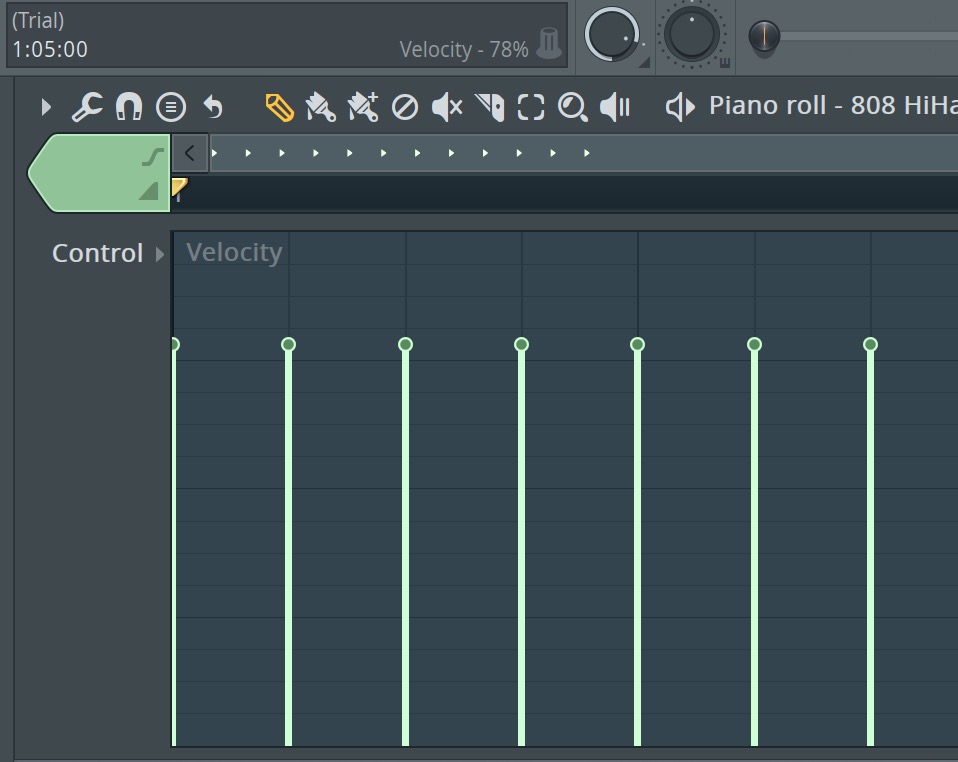

FL Studio’s default velocity is… 78%, which seemingly translates into a MIDI velocity value of around 100 (I guess that math checks out-ish, even though 0 isn’t a usable value).
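For comparison, here are the defaults above as a share of the 0 to 127 range. I don’t know how FL Studio maps its percentage internally, so this Python snippet assumes a plain linear scale; under that assumption 78% lands at about 99.

```python
def percent_to_velocity(percent: float) -> int:
    """Convert a 0-100% velocity setting to MIDI 0-127 (assumes a linear scale)."""
    return round(percent * 127 / 100)


# Piano roll defaults from this section, plus the spec's value for
# devices that don't implement velocity.
defaults = {
    "MIDI 1.0 spec": 64,
    "Logic": 80,
    "Ableton": 100,
    "FL Studio (78%, assumed linear)": percent_to_velocity(78),
}
for name, velocity in defaults.items():
    print(f"{name}: {velocity} ({velocity / 127:.0%} of 127)")
```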

A DAW’s default doesn’t matter much on its own, since most people stick to one DAW. But it does mean that different people on different DAWs will get different sounds out of their instruments when they use the default velocity (which, according to my tiny poll, seems common!)

MIDI Velocity Implementations in Software Synths

My favorite velocity control is in GarageBand:

A nice ranged slider with two “thumbs”. Easy to dial in by feel. No labels or values to worry about. Gives you what you want.

It’s the perfect level of granularity to add a bit more / less dynamics. It doesn’t troll you into thinking the finer details matter.

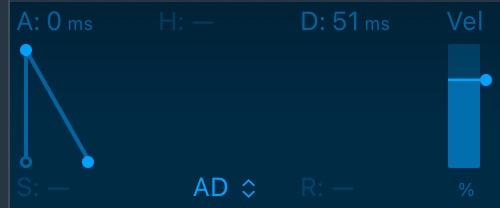

Cryptic implementations in synths are also quite common:

This is how the Logic manual describes Quick Sampler’s velocity control:

Raising the slider value reduces the envelope minimum amplitude, with the difference being dynamically controlled by keyboard velocity. For example, when you set the Vel slider to 25%, the minimum envelope amplitude is reduced to 75%. The remaining 25% is added in response to the velocities of keys you play. A key played with a zero velocity results in an envelope amplitude of 75%. A key played with a MIDI velocity value of 127 will result in an envelope amplitude of 100%. When you raise the Vel slider value, the minimum amplitude decreases even further.

Logic Pro User’s Guide on Quick Sampler
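Reading that description as a formula: the Vel slider sets a floor of `1 - vel_amount`, and velocity adds back up to `vel_amount` on top. The manual only states the endpoints, so the linear scaling in between is my assumption. A Python sketch:

```python
def quick_sampler_amplitude(vel_amount: float, velocity: int) -> float:
    """Envelope amplitude (0.0-1.0) under one reading of the Vel slider.

    vel_amount is the Vel slider as a fraction (0.25 for 25%).
    The floor (1 - vel_amount) and the 100% ceiling at velocity 127
    come from the manual; linear scaling in between is an assumption.
    """
    if not 0.0 <= vel_amount <= 1.0:
        raise ValueError("vel_amount must be between 0 and 1")
    velocity = max(0, min(127, velocity))
    floor = 1.0 - vel_amount
    return floor + vel_amount * (velocity / 127)


print(quick_sampler_amplitude(0.25, 0))    # 0.75, the manual's 75% floor
print(quick_sampler_amplitude(0.25, 127))  # 1.0, the manual's 100%
```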

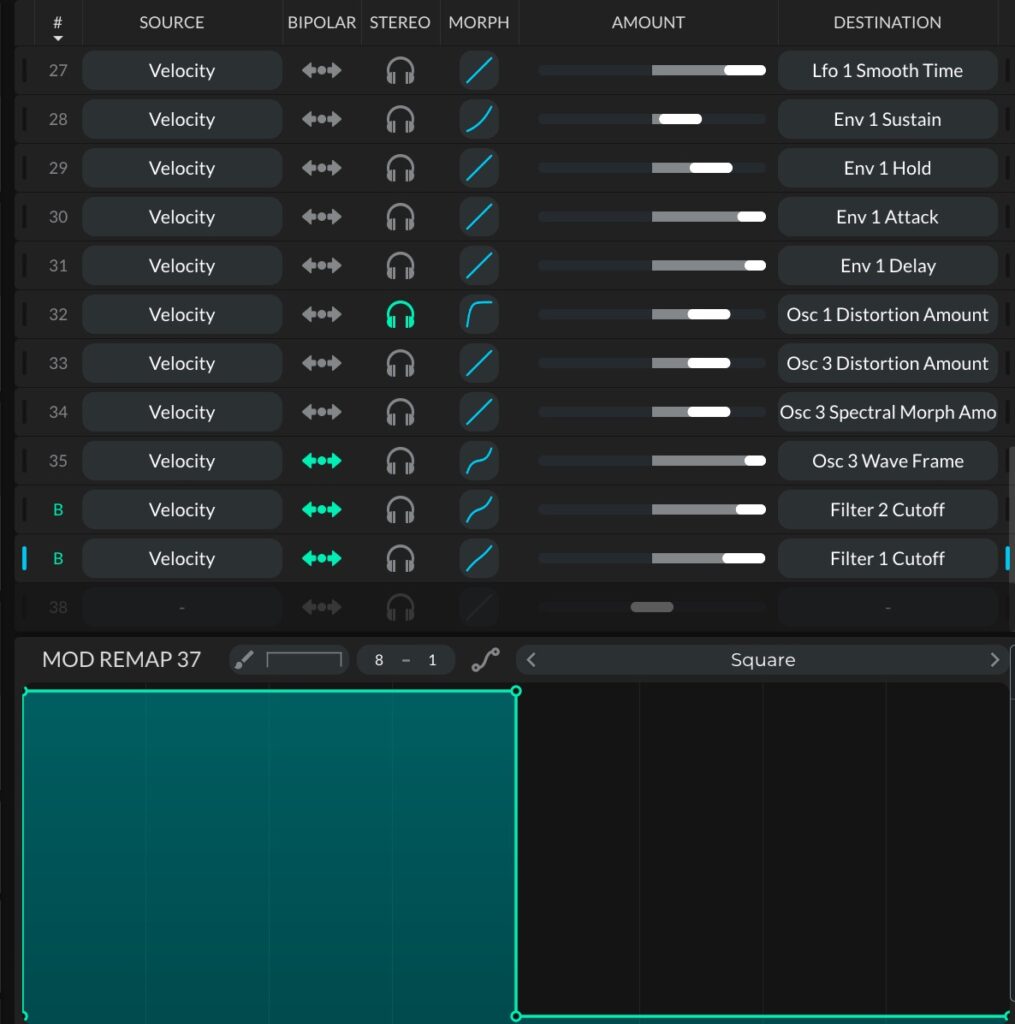

In Vital, velocity can be easily used as a source to modulate any parameter.

The mapping can then be edited to be non-linear, ranged, and even have a custom mapping curve:
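As a rough idea of what a “ranged, non-linear” velocity modulation does, here’s a Python sketch that maps velocity onto a parameter range through a power curve. The function and parameter names are hypothetical and are not Vital’s internals; Vital’s own curve editor allows arbitrary shapes, not just power curves.

```python
def modulate(velocity: int, lo: float, hi: float, curve: float = 1.0) -> float:
    """Map velocity 1-127 onto a parameter range [lo, hi] through a power curve.

    Hypothetical helper. curve = 1.0 is linear; > 1.0 keeps soft notes near
    lo for longer; < 1.0 reaches toward hi sooner. Passing lo > hi inverts
    the direction (harder playing lowers the parameter).
    """
    if curve <= 0:
        raise ValueError("curve must be positive")
    x = (max(1, min(127, velocity)) - 1) / 126  # usable range 1-127 -> 0..1
    return lo + (hi - lo) * x ** curve


# e.g. velocity opening a filter amount between 20% and 90%, slow to start
print(modulate(64, 0.2, 0.9, curve=2.0))
```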

Velocity Curves

There’s nothing audio devs love to build (and sound designers to manipulate) more than curve input tools!

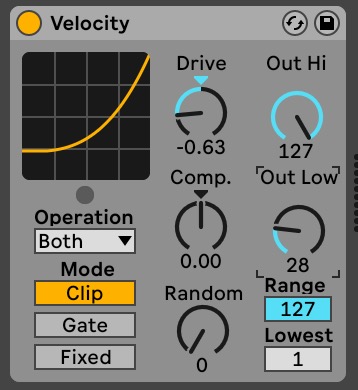

Logic has a MIDI effect that lets you modify incoming velocities from a piece of hardware:

Serum shows you your last note (and what it remapped to) on the velocity curve, which is pretty fun:

Although I prefer my friend imagiro’s implementation (from an unreleased and upcoming project).
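Most curve editors boil down to a handful of breakpoints with interpolation between them. Here’s a minimal Python sketch using straight lines between points; the breakpoint values are hypothetical, and real editors (Logic’s MIDI effect, Serum, etc.) may use smooth or bendable segments instead.

```python
from bisect import bisect_right


def make_breakpoint_curve(points):
    """Build a velocity curve from (input, output) breakpoints.

    Inputs between breakpoints are linearly interpolated; inputs outside
    the first/last breakpoint hold the end values.
    """
    pts = sorted(points)
    if not pts:
        raise ValueError("need at least one breakpoint")
    xs = [x for x, _ in pts]

    def curve(v: int) -> int:
        if v <= xs[0]:
            return pts[0][1]
        if v >= xs[-1]:
            return pts[-1][1]
        i = bisect_right(xs, v)  # xs[i - 1] <= v < xs[i]
        (x0, y0), (x1, y1) = pts[i - 1], pts[i]
        out = y0 + (y1 - y0) * (v - x0) / (x1 - x0)
        return max(1, min(127, round(out)))

    return curve


# Hypothetical curve: lift soft notes, flatten the top end.
curve = make_breakpoint_curve([(1, 20), (64, 80), (127, 110)])
last_in = 100
# Roughly what a "show the last note" display reports.
print(f"last note: {last_in} -> {curve(last_in)}")
```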

Velocity-less synth playing?

Here’s a good data point:

The Moog Model D reissues and the Moog Grandmother have velocity-sensitive keyboards but don’t (by default) map velocity to volume. They leave it up to the user to route.

I’ll put words into Moog’s mouth and translate: “we support MIDI Velocity because we can, but it’s not a great default feature for monophonic synths.”

Notably, the example for velocity in the manual routes to the lowpass filter cutoff, not volume. This seems to be a silent best practice: for synth sounds, lowpass cutoff is the parameter most often “desired” to correlate with velocity. Mapping directly to volume usually just sounds… clunky. Not expressive.
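If velocity goes to cutoff, the mapping curve matters too. A common approach (my suggestion here, not something from the Moog manual) is to interpolate in octaves rather than Hz, so equal velocity steps move the filter by equal musical intervals. In this Python sketch the 400 Hz to 8 kHz range is a placeholder.

```python
def velocity_to_cutoff_hz(velocity: int, min_hz: float = 400.0,
                          max_hz: float = 8000.0) -> float:
    """Map velocity (1-127) to a lowpass cutoff, interpolating in octaves.

    min_hz/max_hz are placeholder values, not taken from any synth.
    Exponential interpolation means each velocity step multiplies the
    cutoff by the same ratio instead of adding the same number of Hz.
    """
    x = (max(1, min(127, velocity)) - 1) / 126  # 0.0 .. 1.0
    return min_hz * (max_hz / min_hz) ** x


for v in (1, 64, 127):
    print(v, round(velocity_to_cutoff_hz(v)))
```

At the middle of the range the cutoff sits at the geometric mean of the two limits (about 1.8 kHz here), not the arithmetic mean, which is what keeps soft and medium playing from all sounding equally dull.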

MPE doubles down

I recently bought an MPE enabled controller to see what I’m missing out on. It’s funny, I have people asking me to support MPE as a feature even though my synth isn’t available yet. I figured the features must be amazing if people are that hyped on it.

Unfortunately, I haven’t yet found musical contexts where MPE has been very useful. I’ve mapped my controller to various controls of my synth or other synths, but the “feel” is wild and difficult to control (perhaps my controller is overly sensitive, or the values it sends need to be lowpassed somehow?)
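For what “lowpassed somehow” might mean in practice, the simplest option is a one-pole smoother on the incoming controller values. This is a Python sketch, not something I’ve verified fixes MPE feel; the `alpha` value is arbitrary, and in a real synth you’d derive it from the control rate and a desired time constant.

```python
class OnePoleSmoother:
    """One-pole (exponential) lowpass for a stream of controller values."""

    def __init__(self, alpha: float, initial: float = 0.0):
        # alpha in (0, 1]: 1.0 passes values straight through,
        # smaller values smooth more heavily (and respond more slowly).
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.value = initial

    def update(self, raw: float) -> float:
        # Move a fraction alpha of the way toward the newest value.
        self.value += self.alpha * (raw - self.value)
        return self.value


# Hypothetical jittery pressure readings from a controller (0.0-1.0).
pressure = OnePoleSmoother(alpha=0.2)
for raw in (0.0, 0.9, 0.1, 0.8, 0.2, 0.85):
    print(round(pressure.update(raw), 3))
```

The tradeoff is latency: the smoother the output, the more sluggishly it follows deliberate gestures, so this trades one kind of “feel” problem for another.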

In theory, MPE seems like a great solution to “player expression.” In practice, at least to me, it feels like a novelty. I don’t have any experience with an all-in-one hardware and software solution like Osmose or Seaboard, but my assumption is those may be an exception: a place where MPE can be a reliable form of expression.

Otherwise, I’d characterize MPE as having the same exact problem as MIDI velocity, but with more axes! It’s like velocity squared: the experience varies wildly across different setups and patches. And due to this hugely variable experience, the MPE feature set can’t generally be relied on for player intent and expression.

Reliable Proxies for Dynamics Expression

But what do the pros do?

Composers scoring for film are “power users” when it comes to expressive MIDI playing. They input expressive string and orchestral parts with MIDI controllers.

Software made for film scoring uses CC #1 (mod wheel) for “dynamics,” which often means cross-fading between different source samples. In some cases, MIDI velocity is ignored, as variations in velocity would produce unnatural sounding instrument lines (big parallel to synth sounds here!).
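Here’s a sketch of the cross-fading idea in Python: the mod wheel position is spread across a set of dynamic layers (softest first), and neighboring layers are blended with an equal-power crossfade. The layer count and the equal-power law are assumptions; each sample library has its own scheme.

```python
import math


def layer_gains(cc1: int, num_layers: int) -> list:
    """Gain per dynamic layer (softest first) for a mod wheel value 0-127.

    The two layers neighboring the wheel position are blended with an
    equal-power crossfade (cos/sin), so their summed power stays at 1.0
    while the timbre shifts from one layer to the next.
    """
    if num_layers < 1:
        raise ValueError("need at least one layer")
    gains = [0.0] * num_layers
    if num_layers == 1:
        gains[0] = 1.0
        return gains
    pos = max(0, min(127, cc1)) / 127 * (num_layers - 1)
    lower = min(int(pos), num_layers - 2)  # index of the softer neighbor
    t = pos - lower                        # 0.0 = all lower, 1.0 = all upper
    gains[lower] = math.cos(t * math.pi / 2)
    gains[lower + 1] = math.sin(t * math.pi / 2)
    return gains


# Hypothetical pp / mf / ff layers, wheel about halfway up.
print([round(g, 3) for g in layer_gains(64, 3)])
```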

In other cases, the CC dynamics value behaves as a “compressor” for incoming MIDI velocity values.
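One possible reading of that “compressor” behavior, sketched in Python: the mod wheel sets a target level and each note’s velocity is pulled toward it by a ratio. The function and the default ratio of 4 are hypothetical, not taken from any particular library.

```python
def compress_velocity(velocity: int, cc1: int, ratio: float = 4.0) -> int:
    """Pull a played velocity toward a target level set by the mod wheel.

    Hypothetical mapping: cc1 (0-127) picks the level the line should sit
    at, and `ratio` shrinks how far each note may deviate from it
    (4.0 keeps a quarter of the played deviation; 1.0 changes nothing).
    """
    if ratio < 1.0:
        raise ValueError("ratio must be >= 1")
    target = max(1, min(127, cc1))
    out = target + (velocity - target) / ratio
    return max(1, min(127, round(out)))


# Uneven playing (40 vs 120) with the wheel at 80 comes out as 70 vs 90.
print(compress_velocity(40, 80), compress_velocity(120, 80))
```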

Additionally, CC #11 (expression) is mapped to volume.

This leads to composers often riding 2 faders while they play single note lines: one to control the “dynamics layer” and the other to control the “loudness.”
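The second fader is the simpler of the two. This Python sketch maps CC #11 to a linear gain by sweeping linearly in decibels; the -48 dB floor and the “0 means silence” rule are placeholder choices, since libraries differ. Because it only scales volume, it can be ridden independently of whatever the CC #1 layer blend is doing.

```python
def expression_gain(cc11: int, floor_db: float = -48.0) -> float:
    """Linear amplitude for CC #11 (expression), swept linearly in decibels.

    Placeholder mapping: 0 is silence; 1-127 sweep from floor_db up to 0 dB.
    """
    cc11 = max(0, min(127, cc11))
    if cc11 == 0:
        return 0.0
    db = floor_db * (127 - cc11) / 126
    return 10 ** (db / 20)  # dB to linear amplitude


for value in (0, 32, 64, 96, 127):
    print(value, round(expression_gain(value), 4))
```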

Conclusions and Solutions?

Did I learn that the ecosystem is even messier than I thought it to be? Yes!

But I’ve also learned:

- Velocity should not map to volume by default on synths. Many synth sounds function similarly to legato orchestral instruments: suddenly changing the overall synth volume is undesirable in many musical cases.

- Velocity could map to brightness + loudness by default in some way, whether via a lowpass cutoff or some other mechanism. Percussive and staccato patches in particular work well with MIDI velocity mapped to loudness.

- Velocity is ultimately a per-patch concern. It’s not possible to generalize much beyond a patch and the two above statements.

- There are power users who absolutely will want to control dynamics in a more subtle way. Mod Wheel (CC#1) is then the drug of choice. There are some passionate MPE players, but they tend to be on very specific software and hardware stacks.
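If I do add velocity someday, the conclusions above translate into fairly small per-patch defaults. A Python sketch, where the field names and amounts are hypothetical starting points rather than tested values:

```python
from dataclasses import dataclass


@dataclass
class VelocityDefaults:
    """Per-patch velocity routing; amounts run 0.0 (ignore) to 1.0 (full)."""
    to_volume: float
    to_cutoff: float


def default_velocity_routing(percussive: bool) -> VelocityDefaults:
    """Hypothetical starting points, meant to be tuned per patch.

    Sustained/legato patches leave volume alone and let velocity open
    the filter; percussive/staccato patches let velocity drive both
    loudness and brightness.
    """
    if percussive:
        return VelocityDefaults(to_volume=0.7, to_cutoff=0.5)
    return VelocityDefaults(to_volume=0.0, to_cutoff=0.4)


print(default_velocity_routing(percussive=False))
print(default_velocity_routing(percussive=True))
```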

So, fine. I’m convinced. I’ll add this default feature. Someday…
