Writing digital signal processing (DSP) code can be pretty tricky already. Does writing tests for audio even make sense?

Of course! DSP’s implicit emphasis on optimization can trick programmers into thinking one can’t create organized, tested and performant code. But DSP code is absolutely ideal for something like unit tests — after all, it’s just a bunch of numbers.

Working with Catch2? Check out my other tips in the Pamplejuce Manual.

Digital Spaghetti Processing?

Writing performant DSP can feel like a free pass to write some good ole’ spaghetti:

Don’t add too much function overhead!

Better not refactor, it might change the performance profile!

It’s ok to leave it like that, it’ll never be touched again!

Maybe I shouldn’t add another function here, it could get more expensive?!

Don’t name your variables anything longer than two characters!

Ok, that last one has nothing to do with performance and might make sense if you are referencing specific math, but… hey, DSP code tends to rival PHP for the most 1990s-esque low-hygiene code on the internet. I blame the academics! 🧑‍🏫

The problem? Brittle code makes refactoring and adding new features risky. Each and every change dooms you to manually check and QA everything to see what might have broken. This can be a significant deterrent to debugging, improving, maintaining, and building upon existing code.

Insert <Root of all evil>

We all care about performance, but it’s never a good reason to preemptively sacrifice readability, maintainability, or testability. “Let’s hope it performs well and then never touch it” isn’t a sustainable practice.

If you are anxious about performance, there’s only ever one prescription: actually measure it. Only then will you have enough information to make an informed decision. Then you can happily keep your code in spaghetti format.
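As a rough idea of what "measure it" can look like, here is a minimal timing sketch using only the standard library. The names `averageMicroseconds` and `applyGain` are made up for this example, and a real measurement would want an optimized build, warm-up runs and many repetitions. Catch2 also ships benchmarking support if you would rather keep measurements next to your tests.

```cpp
#include <cassert>   // for the assert-based checks in the usage note
#include <chrono>
#include <vector>

// Average wall-clock microseconds per call of `process` on `buffer`.
// Hypothetical helper: a sketch, not a replacement for a real profiler.
template <typename Process>
double averageMicroseconds(Process process, std::vector<float>& buffer, int repetitions)
{
    using Clock = std::chrono::steady_clock;

    const auto start = Clock::now();
    for (int i = 0; i < repetitions; ++i)
        process(buffer);
    const std::chrono::duration<double, std::micro> elapsed = Clock::now() - start;

    return elapsed.count() / repetitions;
}

// Stand-in workload: scale every sample in place.
void applyGain(std::vector<float>& buffer)
{
    for (float& sample : buffer)
        sample *= 0.999f;
}
```

Calling `averageMicroseconds(applyGain, buffer, 1000)` before and after a refactor gives you a number to compare instead of a hunch.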

Don’t go to the other extreme, either! DSP is extremely sensitive to memory layout. Wrapping everything in perfectly named nested classes which require loops within loops to access some floats scattered around memory is going too far! Aim to design your code to perform well (nice data layouts) AND be testable. It might feel like a high bar to start, and you might err too much on one side or the other, but it’s a journey, like anything else.

End to end / Null testing / Integration testing for DSP

Most of this article is about unit tests. But sometimes you just want to feed a whole signal in to verify the output of your algorithm.

In other paradigms, this is called end-to-end testing or integration testing. In audio, this translates to setting up your parameters in a certain way, running some audio (or playing a note) and verifying the output.

This is also a great way to regression test an entire algorithm. You’ll get yelled at when the output changes for any reason.

A good example for DSP code is null testing. Run your test, take the output and sum it with an inverted copy of the expected result and expect it to be within some threshold of 0.
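A null test can be sketched in a few lines. This version assumes both signals are already in memory as `std::vector<float>`; the function names and the default threshold are placeholders to adapt, not part of any library.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Largest absolute sample left over after summing `actual` with a
// polarity-inverted copy of `expected`.
float nullResidualPeak(const std::vector<float>& actual,
                       const std::vector<float>& expected)
{
    assert(actual.size() == expected.size());

    float peak = 0.0f;
    for (std::size_t i = 0; i < actual.size(); ++i)
        peak = std::max(peak, std::abs(actual[i] + (-expected[i])));
    return peak;
}

// Passes when the residual stays at or under `threshold` (linear gain, not dB).
// A length mismatch counts as a failure.
bool nullTestPasses(const std::vector<float>& actual,
                    const std::vector<float>& expected,
                    float threshold = 1.0e-6f)
{
    return actual.size() == expected.size()
        && nullResidualPeak(actual, expected) <= threshold;
}
```

The threshold is the judgment call: too tight and harmless floating point differences between platforms fail the test, too loose and real regressions slip through.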

Marius’ plugalyzer is a great tool to help with this. It’s a CLI DAW that even lets you render automation.

You can also look at approval tests for this. I personally haven’t used them yet, but it’s on my list to transition some of my tests that currently have hard coded numbers.

Writing unit tests for DSP

A unit test tests a small piece of code. In the most ideal world, this is one function that performs one task.

Examples:

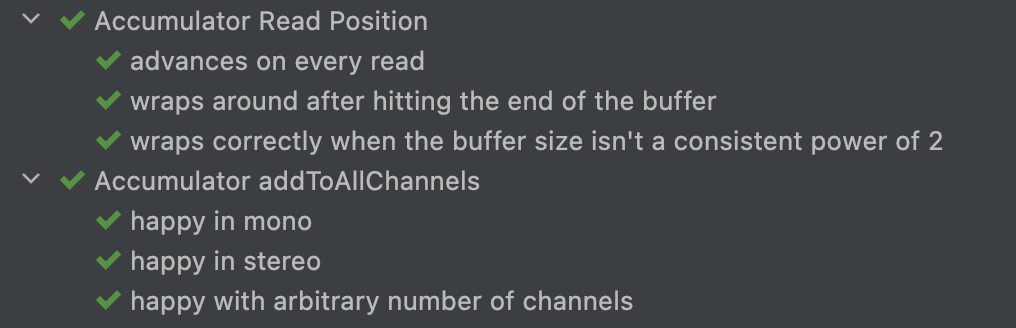

- Where a write pointer is after X samples.

- Whether or not a function returns true under certain conditions.

- How a piece of the code reacts when there’s no signal.

- The contents of a buffer after some operation.
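To make the first and last examples concrete, here is a sketch built around a hypothetical `RingBuffer` class (invented for illustration, not taken from JUCE or any other library). The checks are plain `assert` calls so the snippet has no dependencies; in Catch2 each one would become a `REQUIRE` inside a `TEST_CASE`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical fixed-size circular buffer, for illustration only.
class RingBuffer
{
public:
    explicit RingBuffer(std::size_t capacity) : data(capacity, 0.0f)
    {
        assert(capacity > 0);
    }

    void write(float sample)
    {
        data[writeIndex] = sample;
        writeIndex = (writeIndex + 1) % data.size(); // wrap around at the end
    }

    std::size_t writePosition() const { return writeIndex; }
    float at(std::size_t index) const { return data[index]; }

private:
    std::vector<float> data;
    std::size_t writeIndex = 0;
};

// Pushes `count` copies of `value` into the buffer.
void writeSamples(RingBuffer& buffer, std::size_t count, float value)
{
    for (std::size_t i = 0; i < count; ++i)
        buffer.write(value);
}
```

With a capacity of 8, writing 5 samples should leave the write position at 5, and writing 5 more should wrap it around to 2. Those two expectations are exactly the kind of thing that is tedious to eyeball and trivial to assert.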

I personally like writing DSP unit tests because they:

- Immediately let me know when and where I broke something (vs. waiting for it to be “discovered” and hunting down the “where”).

- Remove refactoring risk, fear and friction.

- Provide additional documentation and clarity for Future-Me around choices that would otherwise be implicit or hidden.

- Make it trivial to confidently reuse and extend code.

Ok, the rest of the article is about WHEN I like to unit test. Hopefully this will help you identify places to get started writing a test or two, as they are really helpful in these situations.

I don’t write tests to achieve perfect coverage. Testing is about efficiency and giving you real-world safety and confidence, not metric-pleasing. Test what matters to you.

I write tests when I catch myself printing numbers to cout

Printing to cout (DBG in JUCE) to manually verify numbers is a pretty common way to sanity check or debug things. However, if I notice that I keep printing out numbers throughout a session, I immediately switch to tests.

20 minutes of test writing will save me hours of poking around now and in the future. Tests are the perfect tool for nailing down numerical requirements and making sure they work for all permutations and edge cases.

Examples:

- Converting between float values and UI-displayable strings like “20ms” (some examples).

- A buffer should be filled to sample number 256 and should be empty after that sample (see my JUCE test helpers).

- There should never be an audio discontinuity.

- A value in the buffer should never go above 1.0.

- A filter should reduce a frequency by a specific amount (the “ground truth” here can be arbitrary; you are just explicitly codifying something you want, like, or think sounds good).
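Several of these boil down to small predicates over a buffer. The helpers below are a sketch with made-up names (`peakMagnitude`, `largestStep`, `isSilentFrom`, `makeSine`), operating on `std::vector<float>` rather than a JUCE buffer to stay self-contained.

```cpp
#include <algorithm>
#include <cassert>   // for the assert-based checks in the usage note
#include <cmath>
#include <cstddef>
#include <vector>

// Largest absolute sample value in the buffer.
float peakMagnitude(const std::vector<float>& buffer)
{
    float peak = 0.0f;
    for (float sample : buffer)
        peak = std::max(peak, std::abs(sample));
    return peak;
}

// Largest jump between neighbouring samples. A click or dropout shows up
// as a jump far bigger than the signal could produce on its own.
float largestStep(const std::vector<float>& buffer)
{
    float step = 0.0f;
    for (std::size_t i = 1; i < buffer.size(); ++i)
        step = std::max(step, std::abs(buffer[i] - buffer[i - 1]));
    return step;
}

// True when every sample from `firstIndex` to the end is exactly zero.
bool isSilentFrom(const std::vector<float>& buffer, std::size_t firstIndex)
{
    for (std::size_t i = firstIndex; i < buffer.size(); ++i)
        if (buffer[i] != 0.0f)
            return false;
    return true;
}

// Test signal: a sine wave at the given frequency and gain.
std::vector<float> makeSine(double frequencyHz, double sampleRate,
                            std::size_t numSamples, float gain)
{
    const double twoPi = 6.283185307179586;
    std::vector<float> out(numSamples);
    for (std::size_t i = 0; i < numSamples; ++i)
        out[i] = gain * static_cast<float>(
            std::sin(twoPi * frequencyHz * static_cast<double>(i) / sampleRate));
    return out;
}
```

For a 440 Hz sine at 48 kHz with a gain of 0.5, `largestStep` stays below 0.03. Cut that sine off abruptly at sample 256 and the step at the cut jumps to roughly 0.43, which is the discontinuity a test like `assert(largestStep(output) < 0.03f)` would catch.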

I write tests when juggling sample rates and buffer sizes

I tried a few sample rates. It should work on the rest of em, right…?

-Me, every time.

Why hope when you can verify? With tests running against every sample rate, buffer size and platform, I can quickly see under which conditions things break. If the tests weren’t able to catch the problem this time, sprinkle in some new ones to act as future insurance.

Without tests, I might not catch the problem immediately enough to know what change introduced it. Someone else (a user) might catch the problem for me. I might have to work backwards from the UI to even reproduce the conditions.
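One way to sketch this: loop the same expectations over every sample rate and block size you care about. The `Smoother` below is a hypothetical one-pole smoother standing in for "the DSP under test", and the lists of rates and block sizes are just examples. Two things are checked: chopping the signal into different block sizes must not change the output, and the step response must reach about 63% after one time constant at every sample rate.

```cpp
#include <algorithm>
#include <cassert>   // for the assert-based checks in the usage note
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical one-pole smoother, a stand-in for the DSP under test.
class Smoother
{
public:
    void prepare(double sampleRate, double timeConstantMs)
    {
        coefficient = std::exp(-1.0 / (sampleRate * timeConstantMs * 0.001));
        state = 0.0;
    }

    // Smooths the block in place.
    void process(float* samples, std::size_t numSamples)
    {
        for (std::size_t i = 0; i < numSamples; ++i)
        {
            state = samples[i] + coefficient * (state - samples[i]);
            samples[i] = static_cast<float>(state);
        }
    }

private:
    double coefficient = 0.0;
    double state = 0.0;
};

// Feeds a unit step through a 10 ms smoother in chunks of `blockSize`.
std::vector<float> renderStep(double sampleRate, std::size_t blockSize,
                              std::size_t totalSamples)
{
    Smoother smoother;
    smoother.prepare(sampleRate, 10.0);

    std::vector<float> signal(totalSamples, 1.0f);
    for (std::size_t start = 0; start < totalSamples; start += blockSize)
        smoother.process(signal.data() + start,
                         std::min(blockSize, totalSamples - start));
    return signal;
}

// Runs the same expectations for every sample rate / block size pair.
bool behavesEverywhere()
{
    const std::vector<double> sampleRates { 22050.0, 44100.0, 48000.0,
                                            88200.0, 96000.0, 192000.0 };
    const std::vector<std::size_t> blockSizes { 1, 16, 63, 64, 480, 512, 2048 };

    for (double sampleRate : sampleRates)
    {
        const auto total = static_cast<std::size_t>(sampleRate * 0.05); // 50 ms
        const auto reference = renderStep(sampleRate, total, total);    // one big block

        for (std::size_t blockSize : blockSizes)
            if (renderStep(sampleRate, blockSize, total) != reference)
                return false;

        // After one time constant (10 ms) the step response is about 1 - 1/e.
        const auto index = static_cast<std::size_t>(sampleRate * 0.010);
        if (std::abs(reference[index] - 0.632f) > 0.01f)
            return false;
    }
    return true;
}
```

A single `assert(behavesEverywhere())` covers 42 combinations here. In a real suite you would likely report which combination failed rather than returning a bare `false`.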

I write tests when I’m threading the logical needle

Juggling myriad logical requirements in my head is prone to error. Like it or not, trees of conditionals get blurry in human brains.

Writing tests helps me get crystal clear about requirements and quickly exposes the edge cases. Writing tests encourages me to think about code branching more discretely and cleanly. It lets me thread the logical needle with more ease.

Bonus: the tests act as documentation, so future me (or others) can see how exactly the system is expected to behave. It’s there, in my editor, clear as day. No need to second guess when revisiting the code 6 months from now.
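As an illustration, here is a sketch of a small decision function with every input combination written out as a table. The rules (what a mono synth voice should do on note on/off with legato) are invented for this example; the point is that the table forces each branch to be stated explicitly.

```cpp
#include <cassert>   // for the assert-based checks in the usage note

enum class VoiceAction { ignore, start, retrigger, glide, release };

// Hypothetical rule set for a mono synth voice, invented for the example.
VoiceAction decide(bool noteOn, bool voiceActive, bool legato)
{
    if (!noteOn)
        return voiceActive ? VoiceAction::release : VoiceAction::ignore;
    if (!voiceActive)
        return VoiceAction::start;
    return legato ? VoiceAction::glide : VoiceAction::retrigger;
}

struct Case
{
    bool noteOn;
    bool voiceActive;
    bool legato;
    VoiceAction expected;
};

// Every combination of the three inputs, written out by hand.
const Case truthTable[] = {
    { false, false, false, VoiceAction::ignore    },
    { false, false, true,  VoiceAction::ignore    },
    { false, true,  false, VoiceAction::release   },
    { false, true,  true,  VoiceAction::release   },
    { true,  false, false, VoiceAction::start     },
    { true,  false, true,  VoiceAction::start     },
    { true,  true,  false, VoiceAction::retrigger },
    { true,  true,  true,  VoiceAction::glide     },
};

// True when `decide` agrees with every row of the table.
bool truthTableHolds()
{
    for (const Case& c : truthTable)
        if (decide(c.noteOn, c.voiceActive, c.legato) != c.expected)
            return false;
    return true;
}
```

Six months from now, the table answers "what happens on note-off while legato is on?" faster than re-reading the conditionals, and `assert(truthTableHolds())` keeps the answer honest.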

I write tests when I already know I’ll need to refactor

What happens when I need to add another feature to my synth engine, such as pitch modulation?

How do I make sure I didn’t break anything critical while moving code around?

When I do inevitably break something, how will I know which piece of the code actually broke?

Answer: Tests. (The alternative is excessive trial and error!) Being able to rely on the test harness to prove a refactor didn’t break anything is a great feeling.

I write tests when I’m too scared to start a refactor

Feeling safe to refactor the hairiest code is something I only feel with good coverage at my back.

The best thing about the fear of refactoring: once I put in the work to add the first few tests, the fear dissipates.

There’s no better feeling than having that fear, diving in, and realizing your past self already has you covered. (Ok, maybe deleting code is still a better feeling, but this is second best!)

When refactoring, don’t be afraid to delete old tests! There’s no point in having 30 broken tests that don’t apply anymore. No need to feel the weight of failing tests hanging over you. The tests are there to serve you, not the other way around.

I write tests when I want to reliably trigger edge cases

Dropping into a debugger is very “whack-a-mole”, especially for real time audio. Tossing in temporary lines of code to trigger conditions, recompiling, setting the UI in just the right way, etc… lots of trial and error.

Once edge cases are known, I like to codify them in tests. Not only does this document them, it also makes them instantly reproducible: just slap in a breakpoint.

The next time I have a bug or feature in the same area, everything is already set up: I can immediately drop into a debugger session.

I write tests when I want to codify an algorithm

Let’s say your DSP algorithm is special. It’s not exact math. It’s an art. You tuned it by ear. You want it to have a little bit of “play.”

That’s fine! You can still install some guard rails. Make sure you’ll never get INFs and NANs. Make sure the signal stays within bounds. Make sure there’s a rising edge when one is expected. Make sure it decays to 0 after X samples, or over Y ms across sample rates.

Defining boundaries gives you more confidence your algorithm is well behaved and will instantly catch regressions when refactoring down the road.
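Here is a sketch of what such guard rails might look like. `renderPluck` is a hypothetical stand-in for a "tuned by ear" algorithm (a saturated, decaying sine burst), and the limits are arbitrary choices for the example. None of the checks pin down exact sample values, so there is still room for play.

```cpp
#include <cassert>   // for the assert-based checks in the usage note
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical "tuned by ear" sound: a saturated, decaying sine burst.
std::vector<float> renderPluck(double sampleRate, double lengthMs)
{
    const double twoPi = 6.283185307179586;
    const auto numSamples = static_cast<std::size_t>(sampleRate * lengthMs * 0.001);

    std::vector<float> out(numSamples);
    for (std::size_t i = 0; i < numSamples; ++i)
    {
        const double seconds = static_cast<double>(i) / sampleRate;
        const double envelope = std::exp(-seconds / 0.030); // 30 ms decay, picked by taste
        const double tone = std::sin(twoPi * 220.0 * seconds);
        out[i] = static_cast<float>(std::tanh(1.8 * envelope * tone));
    }
    return out;
}

// No INFs, no NANs.
bool allFinite(const std::vector<float>& buffer)
{
    for (float sample : buffer)
        if (!std::isfinite(sample))
            return false;
    return true;
}

// Every sample is inside [-limit, +limit].
bool staysWithin(const std::vector<float>& buffer, float limit)
{
    for (float sample : buffer)
        if (std::abs(sample) > limit)
            return false;
    return true;
}

// Every sample from `firstIndex` onward is below `threshold` in magnitude.
bool quietAfter(const std::vector<float>& buffer, std::size_t firstIndex, float threshold)
{
    for (std::size_t i = firstIndex; i < buffer.size(); ++i)
        if (std::abs(buffer[i]) >= threshold)
            return false;
    return true;
}

// The guard rails, expressed in milliseconds so they hold at any sample rate.
bool pluckIsWellBehaved(double sampleRate)
{
    const auto pluck = renderPluck(sampleRate, 500.0);
    const auto after400Ms = static_cast<std::size_t>(sampleRate * 0.4);

    return allFinite(pluck)
        && staysWithin(pluck, 1.0f)
        && pluck.size() > 1 && pluck[1] > pluck[0]   // rising edge at the start
        && quietAfter(pluck, after400Ms, 1.0e-4f);   // about -80 dB
}
```

Running `pluckIsWellBehaved` at 44.1 kHz, 48 kHz and 96 kHz checks the same behavior in milliseconds rather than samples, so retuning the decay by ear only breaks the test if the sound actually leaves the guard rails.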

How to get started writing DSP tests

If you aren’t testing audio yet, I recommend trying out Catch2 for C++. You can also come hang out in the #testing-and-profiling channel on the Audio Programmer Discord.

If you are getting started with JUCE, check out Pamplejuce, my template on GitHub and the section of the manual on tests.
